What we'll talk about

Imagine this scenario:

You have a product with well-documented features but a high volume of support inquiries. Most of these inquiries are questions already addressed in the documentation. Due to the volume, you’ve had to hire multiple support agents just to keep up.

Now imagine implementing a solution that can read your entire documentation and answer customer messages based on that information. How amazing would that be?

That's exactly what we’re going to build today. In this article, we’ll learn how to create an AI-powered chat from scratch. By the end of this reading, you’ll have a complete API capable of registering text materials to automatically answer questions.

What you should know first

- Required: Fluency in NodeJS

- Required: Knowledge in Docker

Introduction

The technical name of what we're about to build is RAG, that stands for "Retrieval Augmented Generation". A RAG application combines external information sources, like documents, with an LLM to build the adequate answer for given query.

In our project the RAG will use pre-imported text inputs as information source to answer queries.

LLM, or Large Language Model, is a type of artificial intelligence model designed to understand and generate human language.

Given the definitions above, we can assume that we need at least two infra components: an information source and an LLM.

Chroma

According to the documentation, Chroma is a AI-native open-source vector database. We'll use Chroma as our external information source, as mentioned earlier.

The key difference here is that, unlike databases like PostgreSQL, which uses tables, or MongoDB, which uses documents to store data, Chroma stores data as vectors.

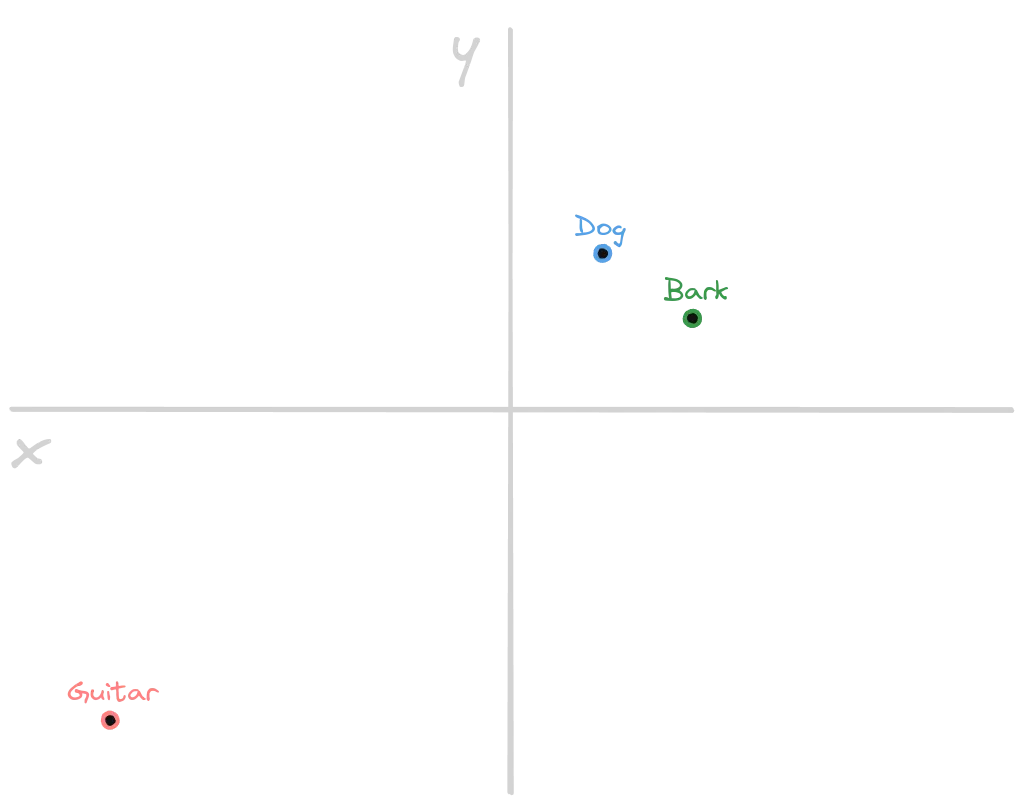

To understand how a vector database works, imagine a Cartesian plane with various points in different positions. Each point represents a piece of data of our imported documents.

Points that are close to each other represent related information. For example, the concepts "dog" and "bark" might be close to each other since dogs bark. On the other side, "dog" would be far from "guitar" since there is no significant relationship between these concepts.

The image below shows a Cartesian plane to illustrate this example:

The main difference between the vector database and the Cartesian plan metaphor is that while Cartesian plan has only 2 dimensions, vector databases can have hundreds or thousands dimensions. Hard to imagine, right?

In order to actually store concepts into a vector database, we first need to transform the concept into a correspondent coordinate, at the end of the process a word will be converted into a number. This transformation is one of the roles of an LLM.

Ollama

Ollama is an open-source platform designed to facilitate the deployment and usage of large language models (LLMs) on local devices rather than relying on cloud-based services.

We'll use Ollama with two different LLMs for two different purposes:

Embedding

The embedding process is a technique of representing objects, such as words, as vectors. The goal of embeddings is to capture the semantic relationships and similarities between objects in a way that can be used to perform computational tasks.

That's the process of transforming concepts into numbers that can be stored into the vector database that we talked earlier.

For this process we'll use the model nomic-embed-text. This model offers good performance and can handle long context tasks, which is essential since we need to provide documents of context into the prompt.

The prompt is the question or input given to an AI model that guides the AI in generating a response.

Text generation

The text generation is to, given a prompt, generate a response that looks like an human response. The goal here is to use the context provided by the external source (in this case, Chroma) to build a cohesive answer to the user's question.

For this process, we'll use the model llama3.1 (do not confuse with Ollama). Developed by Meta Inc., this model is currently the most capable openly available LLM, fine-tuned and optimized for chat use cases and outperforms many other open-source chat models.

Building the chat

Now that the core concepts are clear, we're ready to begin building.

Infrastructure

In this project we'll use Docker to manage the infrastructure components. So let's start by configuring it, below is the project's Docker Compose file:

# docker-compose.yml services: ollama: image: ollama/ollama:0.3.6 container_name: ollama restart: unless-stopped ports: - "11434:11434" chromadb: image: chromadb/chroma:0.5.5 container_name: chromadb restart: unless-stopped ports: - "8000:8000" environment: - IS_PERSISTENT=true

To start all containers run the following command:

$ docker compose up -d

With the containers now running, the next step is to pull the LLMs we'll be using. To do this, execute the following commands:

$ docker exec -it ollama bash $ ollama pull nomic-embed-text $ ollama pull llama3.1

Awesome! With the infrastructure set up, we're ready to proceed to the next steps.

Code

In this project, we'll use NodeJS 21.3 along with Fastify to handle HTTP requests. The choice of language and framework is based on my experience, but you can easily use Python if you prefer.

Let's start by configuring environment variables, create a .env file at the project's root with the following content:

# .env CHROMA_URL=http://localhost:8000 OLLAMA_URL=http://localhost:11434

Next, install all the required dependencies using the following commands:

$ yarn init -y $ yarn add @langchain/community@0.2.28 @langchain/core@0.2.31 @langchain/ollama@0.0.4 langchain@0.2.16 chromadb dotenv fastify@4.28.1

Langchain is a framework that will help us to handle LLM and ChromaDB operations. It's widely used when developing LLM applications.

With the dependencies installed, we’re ready to start coding. Let’s begin by creating a function that returns a Chroma instance:

// src/db.mjs import { Chroma } from "@langchain/community/vectorstores/chroma"; import { OllamaEmbeddings } from "@langchain/ollama"; export const getChromaInstance = async () => { const embeddings = new OllamaEmbeddings({ model: 'nomic-embed-text', baseUrl: process.env.OLLAMA_URL }); const client = new Chroma(embeddings, { url: process.env.CHROMA_URL, collectionName: 'rag' }); await client.ensureCollection(); return client; }

The code above initializes an OllamaEmbeddings instance using the model nomic-embed-text, as discussed earlier. We then create a Chroma instance by providing the embeddings, Chroma URL, and a collection name.

The embeddings parameter tells Chroma which LLM will generate the embeddings for storing in the vector database.

The collection serves as a grouping mechanism for embeddings. In this case, we’ve created a single collection for the project. Then we use the ensureCollection method to confirm that the collection exists.

// src/setup.mjs import { getChromaInstance } from './db.mjs'; import { RecursiveCharacterTextSplitter } from "langchain/text_splitter"; import { TextLoader } from "langchain/document_loaders/fs/text"; const splitter = new RecursiveCharacterTextSplitter({ chunkSize: 1000, chunkOverlap: 100, lengthFunction: (text) => text.length }); export const setup = async (request, reply) => { const json = request.body; const content = json.content; const buffer = Buffer.from(content, "utf8"); const blob = new Blob([buffer], { type: "text/plain" }); const loader = new TextLoader(blob); const loaded = await loader.load(); const docs = await splitter.splitDocuments(loaded); const chroma = await getChromaInstance(); await chroma.addDocuments(docs); return reply.status(200).send(); }

The code above retrieves content from the request, which represents the text we want to import into Chroma, such as the documentation from the introduction's example.

We then use TextLoader to create a document from this text and split it into smaller chunks. This approach enhances retrieval performance and provides better context from the database. The chunkSize variable determines the size of each document chunk, while chunkOverlap specifies the overlap between chunks. The overlap is essential because breaking the text in arbitrary positions might break sentences, potentially leading to embeddings that miss the nuances of the text.

As the last step we just import the splitted documents into Chroma and reply with a 200 status code.

Now let's build the query endpoint:

// src/template.mjs export const template = ` You’re skilled at teaching and having conversations. Here’s the context you should use: {context} Instructions: - Use only this context to answer - If the context doesn’t answer the question, just say you don’t have that info Question: {question} Answer: `;

// src/query.mjs import { getChromaInstance } from './db.mjs'; import { template } from './template.mjs'; import { formatDocumentsAsString } from "langchain/util/document"; import { PromptTemplate } from "@langchain/core/prompts"; import { Ollama } from "@langchain/ollama"; const llm = new Ollama({ model: 'llama3.1', baseUrl: process.env.OLLAMA_URL }); export const query = async (request, reply) => { const json = request.body; const query = json.query; const chroma = await getChromaInstance(); const docs = await chroma.similaritySearch(query); const context = formatDocumentsAsString(docs); const prompt = PromptTemplate.fromTemplate(template); const formatted = await prompt.format({ context, question: query, }); const prediction = await llm.stream(formatted); reply.header("Content-Type", "text/plain"); return reply.status(200).send(prediction); }

In the file template.mjs we define the template that will be used to ask the LLM for responses. The template specifies the LLM to use only the context provided from the documents to answer the query. The template also has some placeholders that will be replaced by the data coming from external sources.

In the query.mjs file, we first create an instance of the LLM using the model llama3.1 just like we talked earlier.

At the query function we extract the query from the request, then we make a similarity search at Chroma database. Remember, related concepts stays close in the Chroma's vector, so basically Chroma will transform the query into embeddings and compare with the already imported documents to find those that are related.

Next, we format the prompt by replacing the placeholders with the extracted query and the context retrieved from Chroma.

Finally we send the formatted prompt to the LLM using the stream method, which allows us to receive a continuous stream of the LLMs response. This enables us to implement a "ChatGPT-like" feature on the front-end, where the response is displayed in real time as it's generated.

The last step is to register the routes in the Fastify app and start the web server.

// src/main.mjs import { query } from './query.mjs'; import { setup } from './setup.mjs'; import fastify from "fastify"; import "dotenv/config"; const bootstrap = () => { const app = fastify(); app.post('/setup', setup); app.post('/query', query); return app; } const app = bootstrap(); app.listen({ port: 3000, host: '0.0.0.0' }, (err, address) => { if (err) { console.error(err); process.exit(1); } console.log(`Server listening at ${address}`); });

Running the project

To run the project, simply execute the main.mjs file:

$ node src/main.mjs

To import an information inside RAG you can use the following cURL command in your terminal:

$ curl -X POST http://localhost:3000/setup -H "content-type: application/json" -d '{"content": "The sky is red"}'

Please note that we're providing fake information here to demonstrate that the LLM relies exclusively on the imported data. Now, let’s go ahead and send a message:

$ curl -X POST http://localhost:3000/query -H "content-type: application/json" -d '{"query": "What color is the sky?"}'

The response should reflect the imported data, such as: "The sky is red."

You might notice that the request is quite slow. This is because, according to the documentation, Llama3 performs significantly better with a GPU. To optimize performance, you'll need a good GPU and must configure the Ollama Docker setup to utilize it. However, configuring GPU support is beyond the scope of this article.

I'll write an article about how to scale this chat soon, so stay tuned!

Conclusion

In this article, we learned how to build an AI-powered chat application from scratch, without using any cloud dependency.

If you have any questions or feedback, please feel free to send me a message on LinkedIn. If you enjoyed the article, consider sharing it on social media so that more people can benefit from it!